Agents Broke Our Old Infrastructure. Now We Get to Rebuild It.

January 23 2026

By Chang Xu

Developer tools and infrastructure have been a challenging category for founders and investors. The problems were real, but the market became crowded, sales cycles could be long, and successful products often got absorbed into larger platforms before reaching scale.

AI agents changed the mood. They unlocked workflows and introduced novel requirements that are forcing the entire infrastructure layer to be rethought and rebuilt, creating newfound opportunity.

Here are six places where I think the rules are being rewritten.

1. Secure code execution sandboxes are suddenly core infrastructure

In the old world, “we run your containers and manage scaling” was not a compelling pitch. Too many companies, too little differentiation, and the economics were rarely great.

Agents created a new center of gravity. Agents write code, run code, install packages, clone repos, and touch the network. Running that inside your real environment is an unnecessary risk.

So now the question is: how do you run untrusted code with a contained blast radius and configurable permissions?

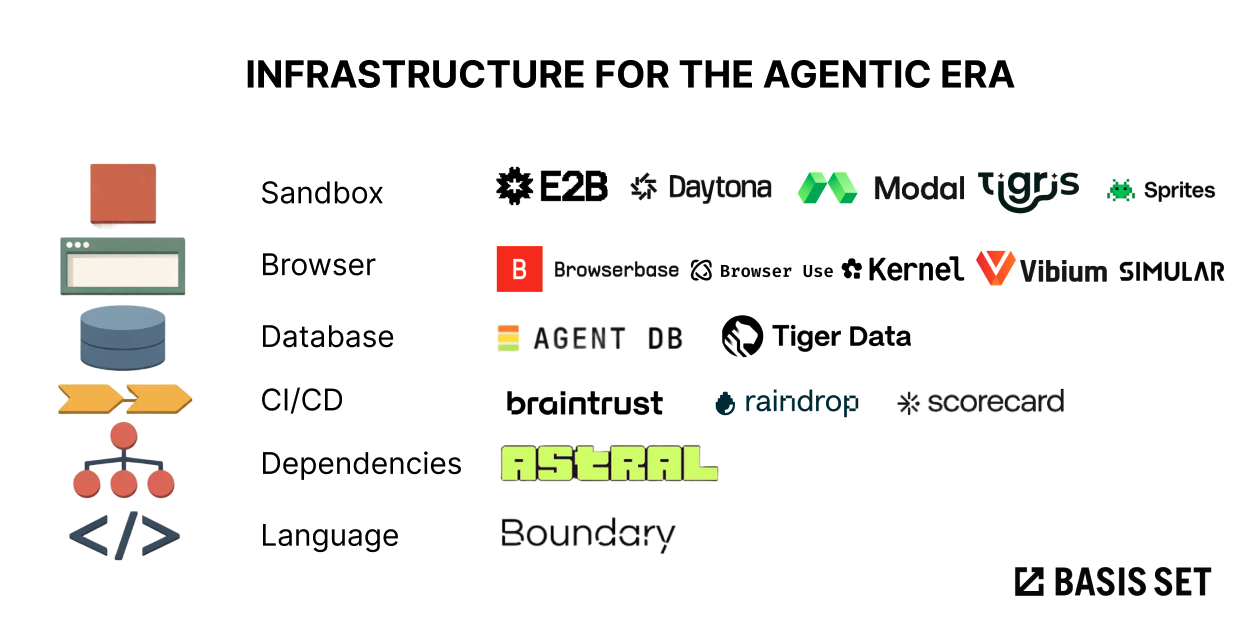

This is why sandboxes are exploding in adoption. E2B, Daytona, and Modal all emphasize secure isolated environments for agent code execution. Fly.io just launched Sprites.dev with stateful sandbox environments and checkpoint restore, explicitly positioned for agent workflows.

The same principle applies to data. Tigris brings copy-on-write bucket forking to object storage, letting each agent work on an isolated clone without duplicating terabytes or risking write conflicts.

This logic extends to mainstream agent products as well. When you start a session in Claude Code's web interface, it clones your GitHub repository into a sandbox environment, letting you vibe code freely within a contained blast radius. Anthropic has continued adding sandboxing boundaries for safer, more autonomous execution. (anthropic.com)

Infrastructure as a service felt commoditized in 2024. Secure execution sandboxes changed that calculus entirely.

2. Browser automation is shifting from QA to agents

Browser automation has been around for decades: Selenium, Puppeteer, Playwright. Historically, the dominant use case was testing. Make sure login works. Make sure checkout works. Make sure the flow did not break.

But the market never felt clean. QA automation is hard, adoption is messy, and plenty of companies ended up looking like services with a UI.

Agents unlocked a different wave: browser automation as a general-purpose capability for agentic workflows and data collection. Companies like Browserbase and Browser Use are getting real traction because they recognized this shift early. The key unlock is incorporating foundation models as the eyes and ears of automation, enabling use cases that scripted selectors could never handle reliably.

The most interesting part is that the underlying assumptions may need to change. A lot of browser tooling was designed for scripts, not agents with context window constraints and tool bandwidth limits. It would not surprise me if we see more stacks move closer to the Chrome DevTools Protocol layer to give agents more structured control. Browser Use’s CDP focused library is a concrete example of this direction. (GitHub)

At the infrastructure layer, Kernel is rethinking how browser environments spin up, optimizing for the fast, ephemeral sessions that agent workflows demand. At the framework layer, while Playwright has become the default for scripted automation, newer projects like Vibium are designing for a different assumption: that agents can reason about navigation rather than just execute predefined paths. Simular extends this to full computer use, offering production-grade desktop automation that can run thousands of sequential steps across nearly any application. The entire stack is being reconsidered.

3. Databases are going to need an agent-native interface

If you pitched "a new database" in 2024, it would have been a tough conversation. The space was mature, incumbents were entrenched, and the path to adoption looked long.

But agents do not use databases the way humans do.

Humans and hand written apps usually know what they want. Agents are explorers. Sometimes they are brilliant. Sometimes they are confidently lost.

If a database is a mansion with 100 rooms, giving an agent the key and a schema is not enough. It needs a tour guide. It needs popular routes. It needs guardrails.

This idea is already showing up in early tools. AgentDB offers an MCP server that spins up file-backed databases instantly, with templates that help agents use schemas correctly. Tiger Data is building Agentic Postgres with features like zero-copy forking, letting developers or agents spin up isolated branches of production databases in seconds for safe experimentation.

My bet is we will get more “agent native” database interaction layers, and maybe entirely new database products designed around agent behavior.

4. CI and CD are about to feel painfully slow

The old CI/CD pipeline timings were fine when it took you days to write a feature.

Now you can get to a first draft in minutes, then you wait. And wait. And wait.

The loop is the real problem. CI fails, you paste the error into the model, it fixes something, you push again, you wait again. That is an embarrassing control system.

We need an agentic inner loop that runs in a sandbox, simulates the relevant checks, iterates until stable, and then hands off to formal CI. Boris Cherny, the creator of Claude Code, described a tight feedback loop where Claude tests changes via a browser workflow before shipping. I find myself reaching for this pattern constantly.

An emerging category of startups is exploring what the agentic loop looks like for evaluations and testing. Braintrust provides an eval platform with native CI/CD integration, automatically running experiments and posting results to pull requests. Raindrop is building "Sentry for AI agents," catching silent failures in production and offering A/B testing for agent changes. Scorecard, built by the team that scaled Waymo's simulation infrastructure to millions of daily scenario tests, lets teams run tens of thousands of agent evaluations and plug them directly into CI pipelines.

These tools are early signs of what is coming. The CI/CD stage of the traditional SDLC is due for reinvention, and I expect we will see much more innovation here.

5. Package managers and dependency trust become urgent when agents write code

Package managers have never been a fun business. Massive adoption, painful monetization.

But agents make dependency ingestion more aggressive, and the open source supply chain is under sustained attack. npm has seen widespread compromises, and a newer threat called slopsquatting has emerged: attackers register package names that LLMs commonly hallucinate, waiting for an agent to install them. (h/t Taylor Clauson)

There is more room for reinvention here than people assume. Astral's uv is a Rust-based Python package installer and resolver, designed as a drop-in replacement for pip workflows. It spread fast because it is simply better: faster, more reliable, and more ergonomic.

This creates room for an agent-era dependency layer: provenance tracking, allow lists, policy enforcement, and safer defaults that let agents pull only from trusted packages. Think of it as supply chain security designed for agentic infrastructure, something like Chainguard but built around agent workflows and software bill of materials requirements.

6. Languages and reliability layers for LLM outputs

Most mainstream languages predate agents. They lack primitives for the patterns that agentic code demands: streaming partial outputs, parallelizing independent LLM calls, handling non-deterministic responses gracefully.

BoundaryML's BAML is designed around these constraints. It provides TypeScript-like type safety for Python teams, automatic parallelization of LLM calls without writing async code, and streaming with partial type guarantees mid-stream. The language generates native clients for Python, TypeScript, Go, and other languages from a single source of truth. (Full disclosure: we’re proud investors of BoundaryML)

The deeper bet is that BAML is designed to be readable by both humans and LLMs. As more code gets written by agents, a language optimized for LLM comprehension and generation could become a compilation target for higher-level tools.

Programming languages have historically been hard to monetize. But when the language is tightly coupled to observability, schema versioning, and production debugging, the commercial surface area expands significantly.

Infrastructure for the agentic era

Secure sandboxes, browser automation, agent-native data access, CI loops that match vibe coding speed, dependency trust for agents pulling packages, and purpose-built languages for LLM outputs. These are not edge cases. They are the foundational layers of the agent era.

If you are building in this space, we would love to hear what you are working on!

Disclosure: We are proud investors in Tigris, Simular, and BoundaryML.